New York City, before September 11, 2001, via Library of Congress.

*The American Yawp is currently in beta draft.*

I. Introduction

Time marches forever on. The present becomes the past and the past becomes history. But, as William Faulkner wrote, “The past is never dead. It’s not even past.” The last several decades of American history have culminated in the present, an era of innovation and advancement but also of stark partisan division, sluggish economic growth, widening inequalities, widespread military interventions, and pervasive anxieties about the present and future of the United States. Through boom and bust, national tragedy, foreign wars, and the maturation of a new generation, a new chapter of American history awaits.

II. American Politics from George H.W. Bush to September 11, 2001

The conservative “Reagan Revolution” lingered over an open field of candidates from both parties as voters approached the presidential election of 1988. At stake was the legacy of a newly empowered conservative movement, a movement that would move forward with Reagan’s vice president, George H. W. Bush, who triumphed over Massachusetts Governor Michael Dukakis with a promise to continue the conservative work that had commenced in the 1980s.

George H. W. Bush was one of the most experienced men ever to rise to the presidency. His father, Prescott Bush, had been a United States Senator from Connecticut. Bush himself had represented a Texas district in the House of Representatives, chaired the Republican National Committee, and directed the Central Intelligence Agency. He was elected vice president in 1980 and president eight years later. His election signaled Americans’ continued embrace of Reagan’s conservative program.

The dissolution of the Soviet Union left the United States as the world’s only remaining superpower. Global capitalism seemed triumphant. The 1990s brought the development of new markets in Southeast Asia and Eastern Europe. Observers wondered whether history had reached some final stage: whether the old battles had ended, whether a new global consensus had formed, and whether a future of peace and open markets would reign forever.

The post-Cold War world was not without international conflicts. After Iraq invaded Kuwait in August 1990, Congress granted President Bush approval to intervene in Kuwait in Operation Desert Shield and Operation Desert Storm, commonly referred to as the first Gulf War. With the memories of Vietnam still fresh, many Americans were hesitant to support military action that could expand into a protracted war or a long-term commitment of troops. But the war was a swift victory for the United States. President Bush and his advisers opted not to pursue the war into Baghdad and risk an occupation and insurgency. And so the war was won. Many wondered if the “ghosts of Vietnam” had been exorcised. Bush’s popularity soared: Gallup polls showed a job approval rating as high as 89% in the weeks after the end of the war.

The Iraqi military set fire to Kuwait’s oil fields during the Gulf War, many of which burned for months and caused massive pollution. Photograph of oil well fires outside Kuwait City, March 21, 1991. Wikimedia, http://commons.wikimedia.org/wiki/File:Operation_Desert_Storm_22.jpg.

President Bush’s popularity seemed to suggest an easy reelection in 1992. Bush faced a primary challenge from political commentator Patrick Buchanan, a former Reagan and Nixon White House adviser, who cast Bush as a moderate, an unworthy steward of the conservative movement who was unwilling to fight for conservative Americans in the nation’s ongoing “culture war.” Buchanan did not defeat Bush in the Republican primaries, but he inflicted enough damage to weaken his candidacy.

The Democratic Party nominated a relative unknown, Arkansas Governor Bill Clinton. Though dogged by charges of marital infidelity and of draft-dodging during the Vietnam War, Clinton was a consummate politician with enormous charisma and a skilled political team. He framed himself as a “New Democrat,” a centrist open to free trade, tax cuts, and welfare reform. Twenty-two years younger than Bush, and the first Baby Boomer to make a serious run at the presidency, Clinton presented the campaign as a generational choice. During the campaign he appeared on MTV. He played the saxophone on The Arsenio Hall Show. And he told voters that he could offer the United States a new way forward.

Bush ran on his experience and against Clinton’s moral failings. The GOP convention in Houston that summer featured speeches from Pat Buchanan and religious leader Pat Robertson decrying the moral decay plaguing American life. Clinton was denounced as a social liberal who would weaken the American family with his policies and his moral character. But Clinton was able to convince voters that his moderated Southern brand of liberalism would be more effective than the moderate conservatism of George Bush. Above all, Bush’s candidacy was crippled by a sudden economic recession. “It’s the economy, stupid,” Clinton’s political team reminded the country.

Clinton would win the election, but the Reagan Revolution still reigned. Clinton and his running mate, Tennessee Senator Albert Gore, Jr., both moderate southerners, promised a path away from the old liberalism of the 1970s and 1980s. They were Democrats, but ran conservatively.

In his first term Clinton set out an ambitious agenda that included an economic stimulus package, universal health insurance, a continuation of the Middle East peace talks initiated by Bush’s Secretary of State James Baker, welfare reform, and a completion of the North American Free Trade Agreement (NAFTA) to abolish trade barriers between the U.S., Mexico, and Canada.

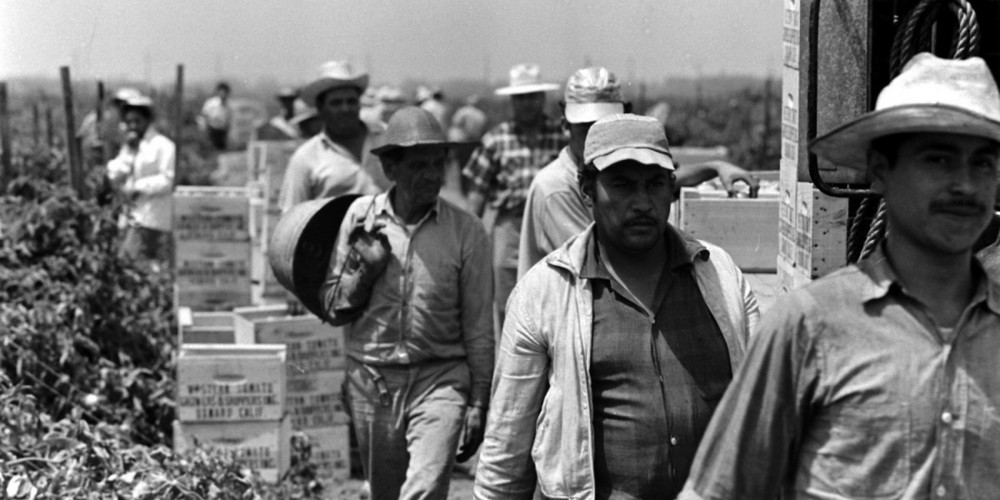

With NAFTA, Clinton reversed decades of Democratic opposition to free trade and opened the nation’s northern and southern borders to the free flow of capital and goods. Critics, particularly in the Midwest’s Rust Belt, blasted the agreement for exposing American workers to deleterious competition from low-paid foreign workers. Many American factories did relocate, setting up shops called maquilas in northern Mexico to take advantage of Mexico’s low wages. Thousands of Mexicans rushed to the maquilas. Thousands more continued on past the border.

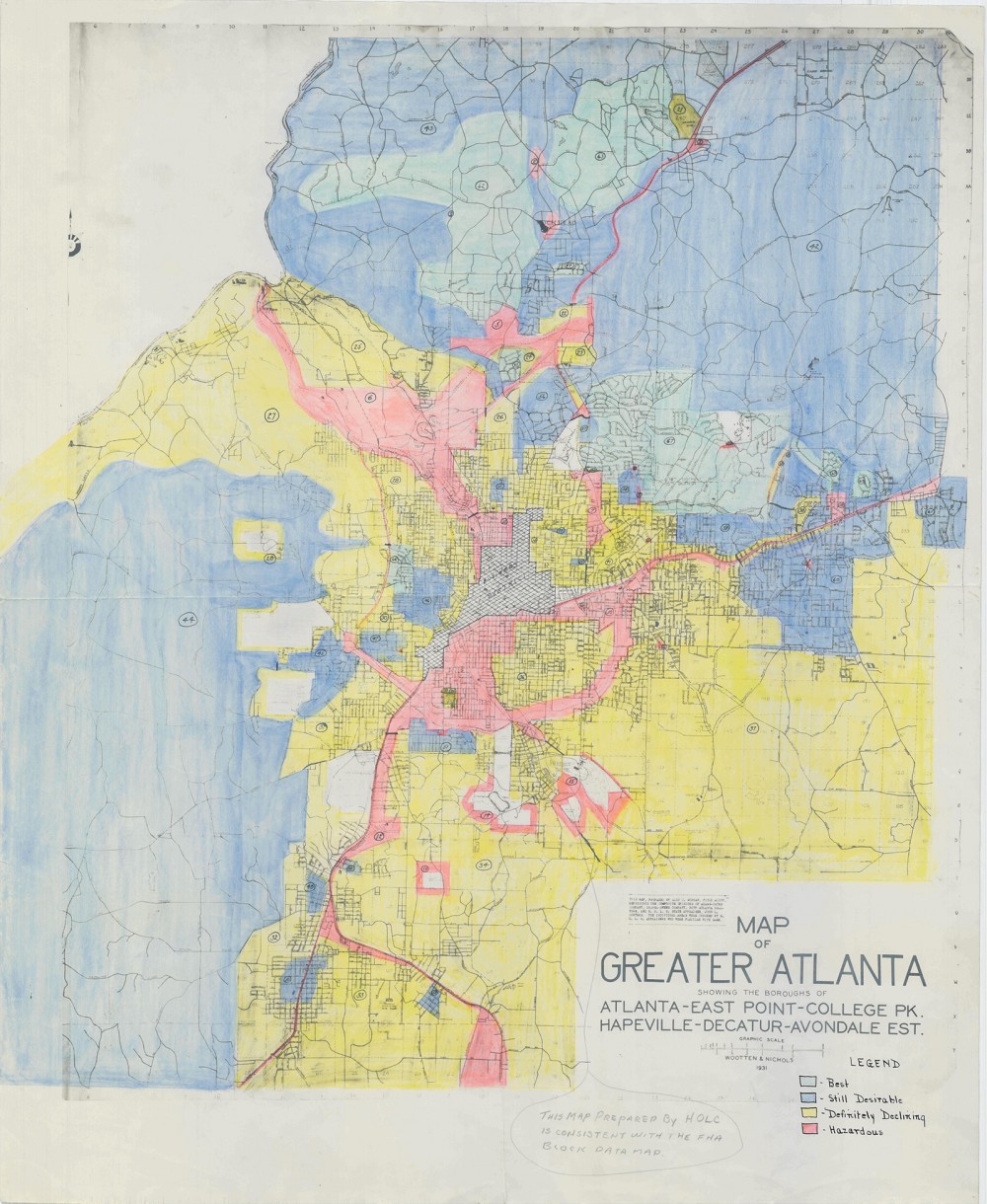

If NAFTA opened American borders to goods and services, people still navigated strict legal barriers to immigration. Policymakers believed that free trade would create jobs and wealth that would incentivize Mexican workers to stay home, and yet multitudes continued to leave for opportunities in el norte. The 1990s proved that preventing illegal migration was, if not impossible, exceedingly difficult. Poverty, political corruption, violence, and hopes for a better life in the United States, or simply higher wages, continued to lure immigrants across the border. Between 1990 and 2010, the proportion of foreign-born individuals in the United States grew from 7.9 percent to 12.9 percent, and the number of undocumented immigrants more than tripled, from 3.5 million to 11.2 million. While large numbers continued to migrate to traditional immigrant destinations (California, Texas, New York, Florida, New Jersey, and Illinois), the 1990s also witnessed unprecedented migration to the American South. Among the fastest-growing immigrant destination states were Kentucky, Tennessee, Arkansas, Georgia, and North Carolina, all of which had immigration growth rates in excess of 100% during the decade.

In response to the continued influx of immigrants and the vocal complaints of anti-immigration activists, policymakers launched initiatives such as Operation Gatekeeper and Operation Hold the Line, which attempted to make crossing the border more difficult. By strengthening physical barriers and increasing the Border Patrol presence in border cities and towns, these initiatives produced a new strategy of “funneling” immigrants toward dangerous and remote crossing areas. Immigration officials hoped the brutal landscape would serve as a natural deterrent.

In his first weeks in office, Clinton reviewed Department of Defense policies that restricted homosexuals from serving in the armed forces. He pushed through a compromise plan, “Don’t Ask, Don’t Tell,” that removed questions about sexual orientation from induction interviews but also required gay servicemen and women to keep their sexual orientation private. Social conservatives were outraged, and his credentials as a conservative southerner suffered.

In his first term Clinton put forward universal health care as a major policy goal and put First Lady Hillary Rodham Clinton in charge of the initiative. But the push for a national healthcare law collapsed. Conservatives revolted, the health care industry flooded the airwaves with attack ads, and voters bristled.

The mid-term elections of 1994 were a disaster for the Democrats, who lost the House of Representatives for the first time since 1952. Congressional Republicans, led by Georgia Congressman Newt Gingrich and Texas Congressman Dick Armey, offered a new “Contract with America.” Republican candidates from around the nation gathered on the steps of the Capitol to pledge their commitment to a conservative legislative blueprint to be enacted if the GOP won control of the House. The strategy worked.

Social conservatives were mobilized by an energized group of religious activists, especially the Christian Coalition, led by Pat Robertson and Ralph Reed. Robertson was a television minister and entrepreneur whose 1988 long-shot run for the Republican presidential nomination brought him a massive mailing list and network of religiously motivated voters around the country. From that mailing list, the Christian Coalition organized supporters nationwide, seeking to influence politics at the local and national levels.

In 1996 the generational contest played out again when the Republicans nominated another aging war hero, Senator Bob Dole of Kansas, but Clinton again won the election, becoming the first Democrat since Franklin Roosevelt to win a second full term.

Clinton presided over a booming economy fueled by emerging computing technologies. Sales of personal computers skyrocketed, and the internet became a mass phenomenon. Communication and commerce were never again the same. The tech boom was driven by new companies, and the 1990s saw robust innovation and entrepreneurship. Investors scrambled to find the next Microsoft or Apple, the suddenly massive computing companies. But it was the internet, “the world wide web,” that sparked a bonanza. The “dot-com boom” fueled enormous economic growth and substantial financial speculation as investors poured money into internet start-ups in search of the next Google or Amazon.

Republicans, defeated at the polls in 1996 and 1998, looked for other ways to undermine Clinton. Political polarization seemed unprecedented as the Republican Congress spent millions on investigations hoping to uncover some shred of damning evidence, whether it concerned real estate deals, White House staffing, or adultery. Rumors of sexual misconduct had always swirled around Clinton, and congressional investigations targeted the allegations. Under oath and in a statement to the American public, Clinton denied having “sexual relations” with White House intern Monica Lewinsky. Republicans used the testimony to allege perjury, and the House of Representatives voted to impeach the president. It was a radical and wildly unpopular step. In a vote that fell mostly along party lines, the Senate acquitted Clinton.

The 2000 election pitted Vice President Albert Gore, Jr. against George W. Bush, the son of the former president and himself twice elected governor of Texas. Gore, wary of Clinton’s recent impeachment despite Clinton’s enduring approval ratings, distanced himself from the president and eight years of relative prosperity and ran as a pragmatic, moderate liberal.

Bush, too, ran as a moderate, distancing himself from the cruelties of past Republican candidates by claiming to represent a “compassionate conservatism” and a new faith-based politics. Bush was an outspoken evangelical. In a Republican primary debate, he declared Jesus Christ his favorite political philosopher. He promised to bring church leaders into government, and his campaign appealed to churches and clergy to get out the vote. Moreover, he promised to bring honor, dignity, and integrity to the Oval Office, a clear reference to Clinton. Utterly lacking the political charisma that had propelled Clinton, Gore withered under Bush’s attacks. Instead of trumpeting the Clinton presidency, Gore found himself answering the media’s questions about whether he was sufficiently an “alpha male” and whether he had “invented the internet.”

Few elections have been as close and contentious as the 2000 election, which ended in a deadlock. Gore had won the popular vote by more than 500,000 votes, but the Electoral College math seemed to have failed him. On election night the media called Florida for Gore, then backpedaled as Bush made gains, and later declared the state for Bush, making him the probable president-elect. Gore conceded privately to Bush, then retracted his concession as the counts edged back toward Gore yet again. When the nation awoke the next day, it was unclear who had been elected president. The close Florida vote triggered an automatic recount.

Lawyers descended on Florida. The Gore campaign called for manual recounts in several counties. Local election boards, Florida Secretary of State Katherine Harris, and the Florida Supreme Court all weighed in until the United States Supreme Court stepped in and, in an unprecedented 5-4 decision in Bush v. Gore, ruled that the recount had to end. Bush was awarded Florida by a margin of 537 votes, which gave him a majority in the Electoral College and the presidency.

In his first months in office, Bush fought to push forward enormous tax cuts skewed toward America’s highest earners and struggled with an economy burdened by the bursting of the dot-com bubble. Old fights seemed ready to be fought, and then everything changed.

III. September 11 and the War on Terror

On the morning of September 11, 2001, 19 operatives of the al-Qaeda terrorist organization hijacked four passenger planes on the East Coast. American Airlines Flight 11 crashed into the North Tower of the World Trade Center in New York City at 8:46 a.m. EDT. United Airlines Flight 175 crashed into the South Tower at 9:03. American Airlines Flight 77 crashed into the western façade of the Pentagon at 9:37. At 9:59, the South Tower of the World Trade Center collapsed. At 10:03, United Airlines Flight 93 crashed in a field outside of Shanksville, Pennsylvania, likely brought down by passengers who had received news of the earlier hijackings. And at 10:28, the North Tower collapsed. In less than two hours, nearly 3,000 people had been killed.

Ground Zero six days after the September 11th attacks. Wikimedia.

The attacks shocked Americans. Bush addressed the nation and assured the country that “The search is underway for those who are behind these evil acts.” At Ground Zero three days later, Bush thanked the first responders. When a worker called out that he couldn’t hear him, Bush shouted back, “I can hear you. The rest of the world hears you. And the people who knocked these buildings down will hear all of us soon.”

American intelligence agencies quickly identified the radical Islamic militant group al-Qaeda, led by the wealthy Saudi Osama bin Laden, as the perpetrators of the attack. Sheltered in Afghanistan by the Taliban, the country’s Islamic government, al-Qaeda had been linked to the 1993 bombing of the World Trade Center and was responsible for a string of attacks on U.S. embassies and military targets across the world. Bin Laden’s Islamic radicalism and his anti-American aggression attracted supporters across the region and, by 2001, al-Qaeda was active in over sixty countries.

The War on Terror

Although in his campaign Bush had denounced foreign “nation-building,” his administration was populated by “neo-conservatives,” firm believers in the expansion of American democracy and American interests abroad. Bush advanced what was sometimes called the Bush Doctrine, a policy under which the United States would have the right to unilaterally and pre-emptively make war upon any regime or terrorist organization that posed a threat to the United States or to its citizens. It would lead the United States into protracted conflicts in Afghanistan and Iraq and entangle it in nations across the world.

The United States and Afghanistan

The United States had a history in Afghanistan. When the Soviet Union invaded Afghanistan in December 1979 to quell an insurrection that threatened to topple Kabul’s communist government, the United States financed and armed anti-Soviet insurgents, the mujahedeen. In 1981, the Reagan Administration authorized the Central Intelligence Agency (CIA) to provide the mujahedeen with weapons and training to strengthen the insurgency. A young, independently wealthy Saudi, Osama bin Laden, also fought with and funded the mujahedeen. The insurgents began to win. Afghanistan bled the Soviet Union dry. The costs of the war, coupled with growing instability at home, convinced the Soviets to withdraw from Afghanistan in 1989.

Osama bin Laden relocated al-Qaeda to Afghanistan in 1996, the same year the Taliban captured Kabul. In 1998, the Clinton administration launched cruise missiles at al-Qaeda camps in Afghanistan in retaliation for al-Qaeda’s bombings of American embassies in Kenya and Tanzania.

Then, after September 11, with a broad authorization of military force, Bush administration officials made plans for military action against al-Qaeda and the Taliban. What would become the longest war in American history began with the launching of Operation Enduring Freedom in October 2001. Air and missile strikes hit targets across Afghanistan. U.S. Special Forces joined with fighters in the anti-Taliban Northern Alliance. Major Afghan cities fell in quick succession. The capital, Kabul, fell on November 13. Bin Laden and al-Qaeda operatives retreated into the rugged mountains of eastern Afghanistan along the Pakistani border. The United States military settled in.

The United States and Iraq

After the conclusion of the Gulf War in 1991, American officials established economic sanctions, weapons inspections, and “no-fly zones” in Iraq. By mid-1991, American warplanes were routinely patrolling Iraqi skies, where they periodically came under fire from Iraqi missile batteries. The overall cost to the United States of maintaining the two no-fly zones over Iraq was roughly $1 billion a year. Related military activities in the region added about another half billion dollars to the annual bill. On the ground in Iraq, meanwhile, Iraqi authorities clashed with U.N. weapons inspectors. Iraq had suspended its program for weapons of mass destruction, but Saddam Hussein fostered ambiguity about the weapons in the minds of regional leaders to forestall any possible attacks against Iraq.

In 1998, a standoff between Hussein and the United Nations over weapons inspections led President Bill Clinton to launch punitive strikes aimed at debilitating what was thought to be a fairly developed chemical weapons program. Attacks began on December 16, 1998. More than 200 cruise missiles, fired from U.S. Navy warships and Air Force B-52 bombers, struck suspected chemical weapons storage facilities, missile batteries, and command centers in Iraq. Airstrikes continued for three more days, unleashing in total 415 cruise missiles and 600 bombs against 97 targets. The number of bombs dropped was nearly double the number used in the 1991 conflict.

The United States and Iraq remained at odds throughout the 1990s and into the early 2000s, when Bush administration officials began considering “regime change.” The Bush Administration began publicly denouncing Saddam Hussein’s regime and its alleged weapons of mass destruction. Officials alleged that Hussein was trying to acquire uranium and that Iraq had obtained aluminum tubes for use in nuclear centrifuges. George W. Bush said in October 2002, “Facing clear evidence of peril, we cannot wait for the final proof—the smoking gun—that could come in the form of a mushroom cloud.” The United States Congress then passed the Authorization for Use of Military Force Against Iraq Resolution, giving Bush the power to make war in Iraq.

In late 2002 Iraq began cooperating with U.N. weapons inspectors. But the Bush administration pressed on. On February 5, 2003, Secretary of State Colin Powell, who had risen to public prominence as an Army general during the Persian Gulf War in 1991, presented allegations of a robust Iraqi weapons program to the United Nations.

The first American bombs hit Baghdad on March 20, 2003. Several hundred thousand troops moved into Iraq, and Hussein’s regime quickly collapsed. Baghdad fell on April 9. On May 1, 2003, aboard the USS Abraham Lincoln, beneath a banner reading “Mission Accomplished,” George W. Bush announced that “Major combat operations in Iraq have ended.” No evidence of weapons of mass destruction had been found or would be found. And combat operations had not ended, not really. The insurgency had begun, and the United States would spend the better part of a decade struggling to contain it.

Combat operations in Iraq would continue for years. Wikimedia, http://upload.wikimedia.org/wikipedia/commons/5/50/USS_Abraham_Lincoln_%28CVN-72%29_Mission_Accomplished.jpg.

Efforts by various intelligence gathering agencies led to the capture of Saddam Hussein, hidden in an underground compartment near his hometown, on December 13, 2003. The new Iraqi government found him guilty of crimes against humanity and he was hanged on December 30, 2006.

IV. The End of the Bush Years

The War on Terror was a centerpiece in the race for the White House in 2004. The Democratic ticket was headed by Massachusetts Senator John F. Kerry, a Vietnam War hero who entered the public consciousness for his subsequent testimony against that war. Kerry attacked Bush for the ongoing failure to contain the Iraqi insurgency, to find weapons of mass destruction, and to capture Osama bin Laden, and for the revelation, backed by photographic evidence, that American soldiers had abused prisoners at the Abu Ghraib prison outside of Baghdad. Moreover, many who had been captured in Iraq and Afghanistan were “detained” indefinitely at a military prison in Guantanamo Bay in Cuba. “Gitmo” became infamous for its harsh treatment, indefinite detentions, and the torture of prisoners. Bush defended the War on Terror, and his allies attacked critics for failing to support the troops. Kerry, for his part, had voted to authorize the war; he was left attacking what he had helped authorize. Bush won a close but clear victory.

The second Bush term saw the continued deterioration of the wars in Iraq and Afghanistan, but Bush’s presidency would take a bigger hit from his perceived failure to respond to the domestic tragedy that followed Hurricane Katrina’s devastating strike on the Gulf Coast in August 2005. Katrina had reached Category 5 strength in the Gulf of Mexico, becoming what New Orleans Mayor Ray Nagin called “the one we always feared.”

New Orleans suffered a direct hit, the levees broke, and the bulk of the city flooded. Thousands of refugees flocked to the Superdome, where supplies, medical treatment, and evacuation were slow to come. Individuals were dying in the heat. Bodies wasted away. Americans saw poor black Americans abandoned. Katrina became a symbol of a broken administrative system, a devastated coastline, and unequal social structures that allowed escape and recovery for some, and not for others. Critics charged that Bush had staffed his administration with incompetent supporters and had ignored the displaced poor and black residents of New Orleans.

Hurricane Katrina was one of the deadliest and most destructive hurricanes in U.S. history. It nearly destroyed New Orleans, Louisiana, as well as cities, towns, and rural areas across the Gulf Coast. It sent hundreds of thousands of refugees to nearby cities like Houston, Texas, where they temporarily resided in massive structures like the Astrodome. Photograph, September 1, 2005. Wikimedia, http://commons.wikimedia.org/wiki/File:Katrina-14461.jpg.

Immigration had become an increasingly potent political issue. The Clinton Administration had overseen the implementation of several anti-immigration policies on the border, but hunger and poverty were stronger incentives than border enforcement policies were deterrents. Illegal immigration continued, often at great human cost, and fanned widespread anti-immigration sentiment among many American conservatives. Many immigrants and their supporters fought back. In 2006, waves of massive protests swept the country. Hundreds of thousands marched in Chicago, New York, and Los Angeles, and tens of thousands marched in smaller cities around the country. Legal change, however, went nowhere. Moderate conservatives feared upsetting business interests that demanded cheap, exploitable labor and alienating large voting blocs by stifling immigration. Moderate liberals, meanwhile, feared upsetting anti-immigrant groups by pushing too hard for the liberalization of immigration laws.

Afghanistan and Iraq, meanwhile, continued to deteriorate. In 2006, the Taliban reemerged, as the Afghan government proved both deeply corrupt and incapable of providing social services or security for its citizens. Iraq only descended further into chaos.

In 2007, 27,000 additional United States forces deployed to Iraq under the command of General David Petraeus. The effort, “the surge,” employed more sophisticated counterinsurgency strategies and, combined with Sunni moves against the disorder, pacified many of Iraq’s cities and provided cover for the withdrawal of American forces. On December 4, 2008, the Iraqi government approved the U.S.-Iraq Status of Forces Agreement, and United States combat forces withdrew from Iraqi cities by June 30, 2009. The last U.S. combat forces left Iraq on December 18, 2011. Violence and instability continued to rock the country.

Opened in 2005, the Islamic Center of America in Dearborn, Michigan, is the largest such religious structure in the United States. Muslims in Dearborn have faced religious and racial prejudice, but the suburb of Detroit continues to be a central meeting-place for American Muslims. Photograph July 8, 2008. Wikimedia.

V. The Great Recession

The Great Recession began, as most American economic catastrophes have begun, with the bursting of a speculative bubble. Throughout the 1990s and into the new millennium, home prices continued to climb, and financial services firms looked to cash in on what seemed to be a safe but lucrative investment. Especially after the dot-com bubble burst, investors searched for a secure investment rooted in clear value rather than trendy technological speculation. And what could be more secure than real estate? But mortgage companies began writing increasingly risky loans, then bundling them together and selling them over and over again, sometimes so quickly that it became difficult to determine exactly who owned what. Decades of lax regulation had again enabled risky business practices to dominate the world of American finance. When American homeowners began to default on their loans, the whole system quickly tumbled. Seemingly solid financial services firms disappeared almost overnight.

In order to prevent the crisis from spreading, the federal government poured billions of dollars into the industry, propping up hobbled banks. Massive giveaways to bankers created shock waves of resentment throughout the rest of the country. On the Right, conservative members of the Tea Party decried the cronyism of an Obama administration filled with former Wall Street executives. The same energies also motivated the Occupy Wall Street movement, in which mostly young, left-leaning New Yorkers protested an American economy that seemed overwhelmingly tilted toward “the one percent.”

VI. The Obama Presidency

By the 2008 election, with Iraq still in chaos, Democrats were ready to embrace the anti-war position and sought a candidate who had consistently opposed military action in Iraq. Senator Barack Obama of Illinois had been a member of the Illinois state senate when Congress debated the war, but he had publicly denounced it, predicting the sectarian violence that would ensue, and he remained critical of the invasion through his 2004 campaign for the U.S. Senate. He began running for president almost immediately after arriving in Washington.

A former law professor and community activist, Obama became the first black candidate ever to capture the nomination of a major political party. During the election, Obama won the support of an increasingly anti-war electorate. He was already riding a wave of support when the fragile economy of the Bush years finally collapsed in 2007 and 2008. Bush’s policies were widely blamed, and Obama’s opponent, Arizona Senator John McCain, was tied to them. Obama won a convincing victory in the fall and became the nation’s first African American president.

President Obama’s first term was marked by domestic affairs, especially his efforts to combat the Great Recession and to pass a national healthcare law. Obama came into office as the economy continued to deteriorate. He managed the bank bailout begun under his predecessor and launched a limited economic stimulus plan to provide countercyclical government spending to spare the country from the worst of the downturn.

Obama’s most substantive legislative achievement proved to be a national healthcare law, the Patient Protection and Affordable Care Act, commonly called “Obamacare” by opponents and supporters alike. The plan, narrowly passed by Congress in 2010, would require all Americans to provide proof of a health insurance plan that measured up to government-established standards. Those who did not purchase a plan would pay a penalty tax, and those who could not afford insurance would be eligible for federal subsidies.

Nationally, as prejudice against homosexuality declined and support for gay marriage reached a majority of the population, the Obama administration moved tentatively. Although he refused to push for national interventions on the gay marriage front, Obama did direct a review of Defense Department policies that led to the repeal of the “Don’t Ask, Don’t Tell” policy in 2011.

In 2009, President Barack Obama deployed 17,000 additional troops to Afghanistan as part of a counterinsurgency campaign that aimed to “disrupt, dismantle, and defeat” al-Qaeda and the Taliban. Meanwhile, U.S. Special Forces and CIA drones targeted al-Qaeda and Taliban leaders. In May 2011, U.S. Navy SEALs conducted a raid deep into Pakistan that led to the killing of Osama bin Laden. The United States and NATO began a phased withdrawal from Afghanistan in 2011, with the aim of removing all combat troops by 2014. Although weak militarily, the Taliban remained politically influential in southern and eastern Afghanistan. Al-Qaeda remained active in Pakistan but shifted its bases to Yemen and the Horn of Africa. As of December 2013, the war in Afghanistan had claimed the lives of 3,397 U.S. and coalition service members.

These former Taliban fighters surrendered their arms to the government of the Islamic Republic of Afghanistan during a reintegration ceremony at the provincial governor’s compound in May 2012. Wikimedia, http://commons.wikimedia.org/wiki/File:Former_Taliban_fighters_return_arms.jpg.

Climate change, the role of government, gay marriage, the legalization of marijuana, the rise of China, inequality, surveillance, a stagnant economy, and a host of other issues have confronted recent Americans with sustained urgency.

In 2012, Barack Obama won a second term by defeating Republican Mitt Romney, the former governor of Massachusetts. However, the ascendancy of Tea Party Republicans effectively shut down bipartisan cooperation, and Obama struggled to pass meaningful legislation. Obama was a lame duck before he ever won reelection. Half-hearted efforts to address climate change, for instance, went nowhere. The economy continued its sluggish recovery. While corporate profits climbed, unemployment remained stubbornly high. The Obama administration campaigned on little to address the crisis and accomplished far less.

IV. New Horizons

Much public commentary in the early twenty-first century concerned the “millennials,” the new generation that had come of age in the new millennium. Commentators, demographers, and political prognosticators continue to ask what the new generation will bring. Pollsters have found certain features that distinguish the millennials from older Americans. They are, the pollsters say, more diverse, more liberal, less religious, and wracked by economic insecurity.

Millennial attitudes toward homosexuality and gay marriage reflect one of the most dramatic changes in popular attitudes in recent years. After decades of advocacy, opinion on the issue has shifted rapidly over the past two decades. Gay characters, and characters with depth and complexity, can be found across the cultural landscape, and, while national politicians were long reluctant to advocate for it, a majority of Americans now favor the legalization of gay marriage.

Even as anti-immigrant initiatives like California’s Proposition 187 (1994) and Arizona’s SB1070 (2010) reflected the anxieties of many, younger Americans proved far more comfortable with immigration and diversity, which makes sense, given that they are the most diverse American generation in living memory. Since Lyndon Johnson’s Great Society liberalized immigration laws, the demographics of the United States have been transformed. In 2012, nearly one-quarter of all Americans were immigrants or the sons and daughters of immigrants. Half came from Latin America. The ongoing “Hispanicization” of the United States and the ever-shrinking proportion of non-Hispanic whites have been the most talked-about trends among demographic observers. By 2013, 17% of the nation was Hispanic. In 2014, Latinos surpassed non-Latino whites to become the largest ethnic group in California. In Texas, the image of a white cowboy hardly captures the demographics of a “minority-majority” state in which Hispanic Texans will soon become the largest ethnic group. In Texas’s Rio Grande Valley, for instance, home to nearly 1.5 million people, a majority of residents speak Spanish at home, and a full three-fourths of the population is bilingual. Political commentators often wonder what political transformations these populations will bring about when they come of age and begin voting in larger numbers.

Younger Americans are also more concerned about the environment and climate change, and yet, on that front, little has changed. In the 1970s and 1980s, experts substantiated the theory of anthropogenic (human-caused) global warming. Eventually, the most influential panel of these experts, the UN’s Intergovernmental Panel on Climate Change (IPCC), concluded in 1995 that there was a “discernible human influence on global climate.” This conclusion, though stated conservatively, was by that point essentially a scientific consensus. By 2007, the IPCC considered the evidence “unequivocal” and warned that “unmitigated climate change would, in the long term, be likely to exceed the capacity of natural, managed and human systems to adapt.”

Climate change became a permanent and major topic of public discussion and policy in the twenty-first century. Fueled by popular coverage, most notably, perhaps, the documentary An Inconvenient Truth, based on Al Gore’s book and presentations of the same name, climate change became a central concern for much of the American left. And yet American public opinion and political action still lagged far behind the scientific consensus on the dangers of global warming. Conservative politicians, conservative think tanks, and energy companies waged a campaign to sow doubt in the minds of Americans, who remain divided on this question, as on so many others.

Much of the resistance to addressing climate change is economic. As Americans look over their shoulders at China, many refuse to sacrifice immediate economic growth for long-term environmental security. Twenty-first-century relations with China are characterized by contradictions and interdependence. After the collapse of the Soviet Union, China reinvigorated its efforts to modernize. By liberalizing and subsidizing much of its economy and drawing enormous foreign investment, China has posted remarkable growth rates during the last several decades. Massive cities rise by the day. In 2000, China had a gross domestic product around an eighth the size of that of the United States. Based on growth rates and trends, analysts suggest that China’s economy will soon surpass that of the United States. American concerns about China’s political system have persisted, but money sometimes matters more to Americans. China has become one of the nation’s leading trading partners. Cultural exchange has increased, and more and more Americans visit China each year, with many settling down to work and study. Conflict between the two societies is not inevitable, but managing bilateral relations will be one of the great challenges of the next decade. It is but one of several aspects of the world confronting Americans of the twenty-first century.

V. Conclusion

The collapse of the Soviet Union brought neither global peace nor stability, and the later attacks of September 11, 2001, plunged the United States into interminable conflicts around the world. At home, economic recession, entrenched joblessness, and general pessimism infected American life as contentious politics and cultural divisions poisoned social harmony. But trends shift, things change, and history turns. A new generation of Americans looks to the future with uncertainty.

This chapter was edited by Michael Hammond, with content contributions by Eladio Bobadilla, Andrew Chadwick, Zach Fredman, Leif Fredrickson, Michael Hammond, Richara Hayward, Joseph Locke, Mark Kukis, Shaul Mitelpunkt, Michelle Reeves, Elizabeth Skilton, Bill Speer, and Ben Wright.

![Jesse Jackson was only the second African American to mount a national campaign for the presidency. His work as a civil rights activist and Baptist minister garnered him a significant following in the African American community, but never enough to secure the Democratic nomination. Warren K. Leffler, “IVU w/ [i.e., interview with] Rev. Jesse Jackson,” July 1, 1983. Library of Congress, http://www.loc.gov/pictures/item/2003688127/.](http://www.americanyawp.com/text/wp-content/uploads/01277v-983x1500.jpg)

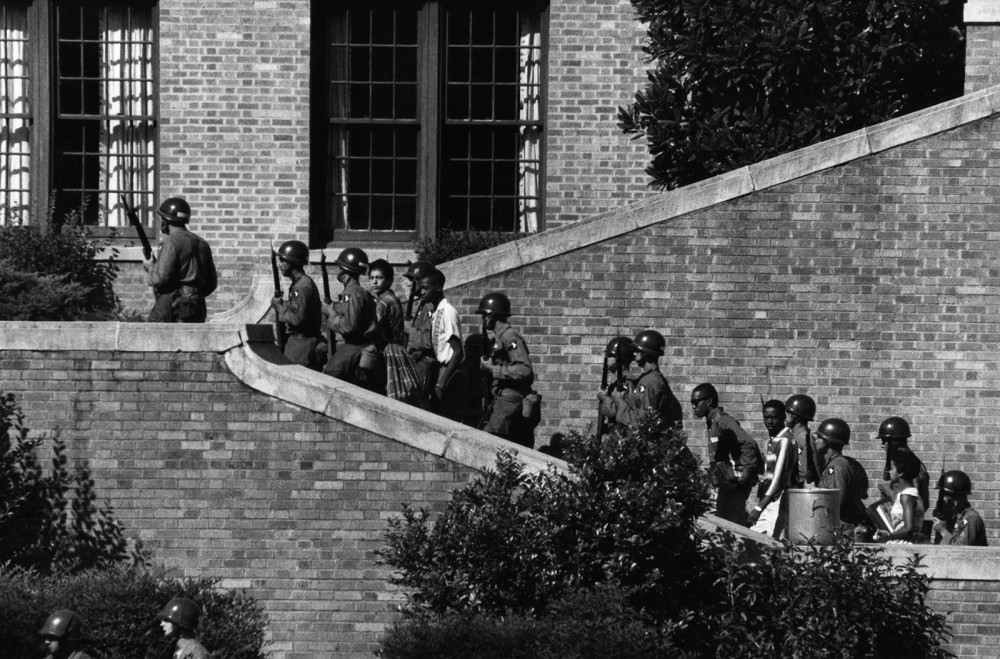

![James Meredith, accompanied by U.S. Marshals, walks to class at the University of Mississippi in 1962. Meredith was the first African American student admitted to the still-segregated Ole Miss. Marion S. Trikosko, “Integration at Ole Miss[issippi] Univ[ersity],” 1962. Library of Congress, http://www.loc.gov/pictures/item/2003688159/.](http://www.americanyawp.com/text/wp-content/uploads/04292v-1000x653.jpg)

![Like Booker T. Washington and W.E.B. Du Bois before them, Martin Luther King, Jr., and Malcolm X represented two styles of racial uplift while maintaining the same general goal of ending racial discrimination. How they would get to that goal is where the men diverged. Marion S. Trikosko, “[Martin Luther King and Malcolm X waiting for press conference],” March 26, 1964. Library of Congress, http://www.loc.gov/pictures/item/92522562/.](http://www.americanyawp.com/text/wp-content/uploads/3d01847v-1000x651.jpg)

![The women’s movement stagnated after gaining the vote in 1920, but by the 1960s it was back in full force. Inspired by the Civil Rights Movement and fed up with gender discrimination, women took to the streets to demand their rights as American citizens. Warren K. Leffler, “Women's lib[eration] march from Farrugut Sq[uare] to Layfette [i.e., Lafayette] P[ar]k,” August 26, 1970. Library of Congress, http://www.loc.gov/pictures/item/2003673992/.](http://www.americanyawp.com/text/wp-content/uploads/03425v-1000x675.jpg)

![Alabama governor George Wallace stands defiantly at the door of the University of Alabama, blocking the attempted integration of the school. Wallace was perhaps the most notoriously pro-segregation politician of the 1960s, proudly proclaiming in his 1963 inaugural address “segregation now, segregation tomorrow, segregation forever.” Warren K. Leffler, “[Governor George Wallace attempting to block integration at the University of Alabama],” June 11, 1963. Library of Congress, http://www.loc.gov/pictures/item/2003688161/.](http://www.americanyawp.com/text/wp-content/uploads/04294v1-1000x666.jpg)

![Advertising began creeping in everywhere in the 1950s. No longer confined to commercials or newspapers, advertisements were subtly (or not so subtly, in this case) worked into TV shows like the quiz show “21.” (Geritol is a dietary supplement.) Orlando Fernandez, “[Quiz show "21" host Jack Barry turns toward contestant Charles Van Doren as fellow contestant Vivienne Nearine looks on],” 1957. Library of Congress, http://www.loc.gov/pictures/item/00652124/.](http://www.americanyawp.com/text/wp-content/uploads/3c26813v-1000x814.jpg)

![The environment of fear and panic instigated by McCarthyism led to the arrest of many innocent people. Still, some Americans accused of supplying top-secret information to the Soviets were in fact spies. The Rosenbergs were convicted of espionage and executed in 1953 for giving information about the atomic bomb to the Soviets. This was one case that has stood the test of time, for as recently as 2008 a co-conspirator of the Rosenbergs admitted to spying for the Soviet Union. Roger Higgins, “[Julius and Ethel Rosenberg, separated by heavy wire screen as they leave U.S. Court House after being found guilty by jury],” 1951. Library of Congress, http://www.loc.gov/pictures/item/97503499/.](http://www.americanyawp.com/text/wp-content/uploads/3c17772v-500x633.jpg)

![The German aerial bombing of London left thousands homeless, hurt, or dead. This child sits among the rubble with a rather quizzical look on his face, as adults ponder their fate in the background. Toni Frissell, “[Abandoned boy holding a stuffed toy animal amid ruins following German aerial bombing of London],” 1945. Library of Congress, http://www.loc.gov/pictures/item/2008680191/.](http://www.americanyawp.com/text/wp-content/uploads/19004v-1000x1030.jpg)

![During the 1920s, the National Woman’s Party fought for the rights of women beyond suffrage, which they had secured through the 19th Amendment in 1920. They organized private events, like the tea party pictured here, and public campaigns, such as the introduction of the Equal Rights Amendment to Congress, as they continued the struggle for equality. “Reception tea at the National Womens [i.e., Woman's] Party to Alice Brady, famous film star and one of the organizers of the party,” April 5, 1923. Library of Congress, http://www.loc.gov/pictures/item/91705244/.](http://www.americanyawp.com/text/wp-content/uploads/3c02300v-1000x795.jpg)

![Babe Ruth’s incredible talent attracted widespread attention to the sport of baseball, helping it become America’s favorite pastime. Ruth’s propensity to shatter records with the swing of his bat made him a national hero during a period when defying conventions was the popular thing to do. “[Babe Ruth, full-length portrait, standing, facing slightly left, in baseball uniform, holding baseball bat],” c. 1920. Library of Congress, http://www.loc.gov/pictures/item/92507380/.](http://www.americanyawp.com/text/wp-content/uploads/3g07246v-500x632.jpg)